An overview of GP-GPU Architecture

Guide: Prof. Virendra Singh

I carried out this work as a summer project under Prof. Virendra Singh. I was tasked with understanding and implementing an adaptive decoupled last-level cache design for general-purpose GPUs. Since GPUs were new to me at the time, I first studied the SIMT core, the memory system, and the programming model of GP-GPU architectures. I then reviewed the literature on adaptive last-level caching and on implementing it in the GPGPU-Sim simulator. Finally, I ran a range of benchmark simulations on the simulator and analyzed their outputs in detail.

Report

References

2019


Adaptive Memory-Side Last-Level GPU Caching

Xia Zhao, Almutaz Adileh, Zhibin Yu, et al.

In Proceedings of the 46th International Symposium on Computer Architecture, Aug 2019

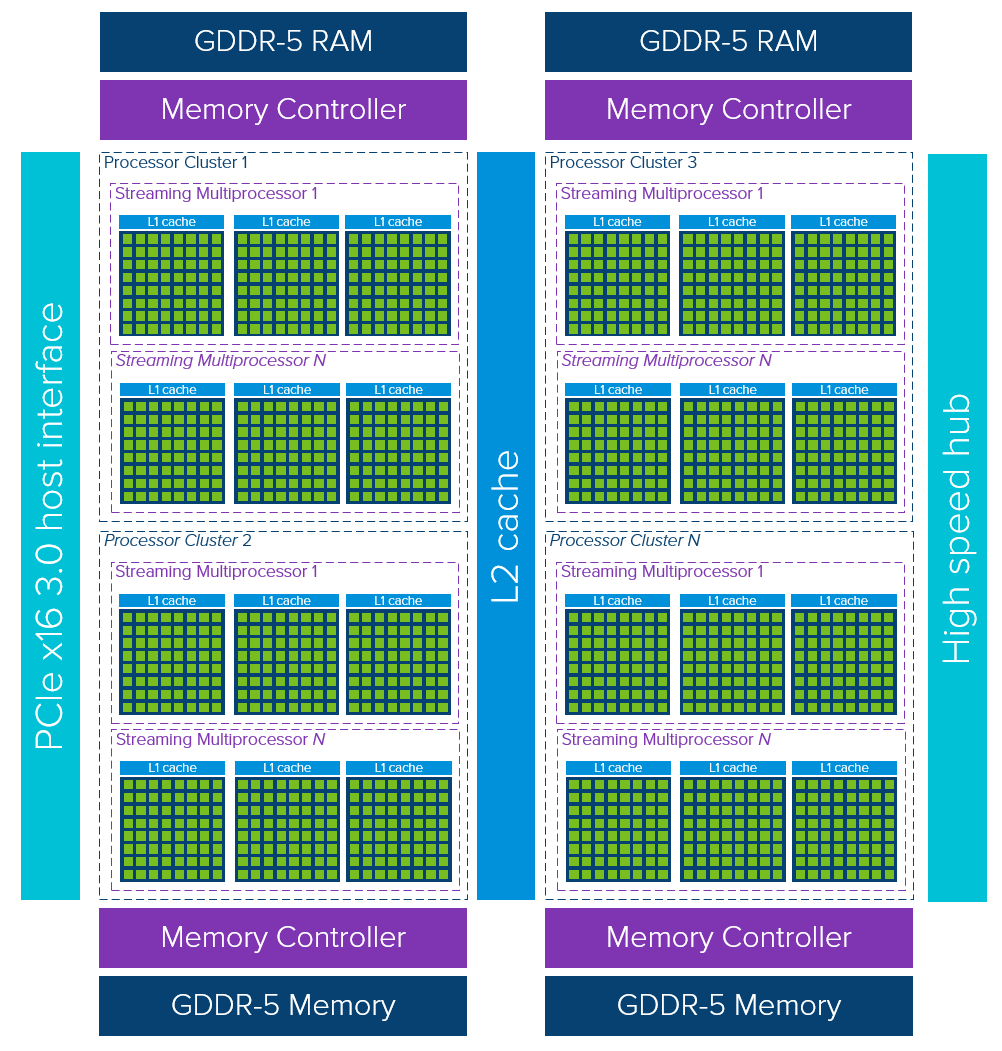

Emerging GPU applications exhibit increasingly high computation demands, which has led GPU manufacturers to build GPUs with an increasingly large number of streaming multiprocessors (SMs). Providing data to the SMs at high bandwidth puts significant pressure on the memory hierarchy and the Network-on-Chip (NoC). Current GPUs typically partition the memory-side last-level cache (LLC) in equally-sized slices that are shared by all SMs. Although a shared LLC typically results in a lower miss rate, we find that for workloads with high degrees of data sharing across SMs, a private LLC leads to a significant performance advantage because of increased bandwidth to replicated cache lines across different LLC slices. In this paper, we propose adaptive memory-side last-level GPU caching to boost performance for sharing-intensive workloads that need high bandwidth to read-only shared data. Adaptive caching leverages a lightweight performance model that balances increased LLC bandwidth against increased miss rate under private caching. In addition to improving performance for sharing-intensive workloads, adaptive caching also saves energy in a (co-designed) hierarchical two-stage crossbar NoC by power-gating and bypassing the second stage if the LLC is configured as a private cache. Our experimental results using 17 GPU workloads show that adaptive caching improves performance by 28.1% on average (up to 38.1%) compared to a shared LLC for sharing-intensive workloads. In addition, adaptive caching reduces NoC energy by 26.6% on average (up to 29.7%) and total system energy by 6.1% on average (up to 27.2%) when configured as a private cache. Finally, we demonstrate through a GPU NoC design space exploration that a hierarchical two-stage crossbar is both more power- and area-efficient than full and concentrated crossbars with the same bisection bandwidth, thus providing a low-cost cooperative solution to exploit workload sharing behavior in memory-side last-level caches.
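To make the shared-versus-private trade-off described in the abstract concrete, here is a minimal Python sketch. It is not the paper's performance model: the line-address interleaving, the even grouping of SMs into one cluster per slice, the 128-byte line size, the bottleneck-style cycle estimate, and all example numbers are illustrative assumptions. It shows two things: a shared LLC keeps a single copy of each line in its home slice, while a private LLC can replicate a widely read line across slices; and an adaptive policy can pick whichever mode has the lower estimated execution time.

```python
"""Toy model of shared vs. private memory-side LLC slices (illustrative only)."""

LINE_SIZE = 128  # assumed cache-line size in bytes


def shared_slice(addr: int, num_slices: int) -> int:
    """Shared LLC: each line has exactly one home slice, chosen by
    simple line-address interleaving (assumed mapping)."""
    return (addr // LINE_SIZE) % num_slices


def private_slice(sm_id: int, num_sms: int, num_slices: int) -> int:
    """Private LLC: each SM uses only the slice of its own cluster
    (assumed: SMs split evenly into one cluster per slice), so a line
    read by SMs in different clusters is replicated in several slices."""
    sms_per_slice = num_sms // num_slices
    return sm_id // sms_per_slice


def estimated_cycles(accesses: int, miss_rate: float,
                     llc_bw: float, dram_bw: float) -> float:
    """Bottleneck estimate: every access consumes LLC bandwidth and every
    miss also consumes DRAM bandwidth; the slower resource dominates.
    Bandwidths are in accesses per cycle."""
    llc_time = accesses / llc_bw
    dram_time = accesses * miss_rate / dram_bw
    return max(llc_time, dram_time)


def choose_mode(accesses: int, shared_miss: float, private_miss: float,
                shared_llc_bw: float, private_llc_bw: float,
                dram_bw: float) -> str:
    """Pick the LLC organization with the lower estimated execution time."""
    shared = estimated_cycles(accesses, shared_miss, shared_llc_bw, dram_bw)
    private = estimated_cycles(accesses, private_miss, private_llc_bw, dram_bw)
    return "private" if private < shared else "shared"


if __name__ == "__main__":
    line = 0x1000
    print("shared copies:", {shared_slice(line, 4)})
    print("private copies:", {private_slice(sm, 8, 4) for sm in range(8)})
    # Sharing-intensive case: hot read-only lines contend for few slices when shared.
    print(choose_mode(10_000, 0.10, 0.20, 4.0, 16.0, 2.0))
    # Little sharing: replication brings no bandwidth gain and raises the miss rate.
    print(choose_mode(10_000, 0.10, 0.40, 16.0, 16.0, 2.0))
```

With these hypothetical numbers, the first `choose_mode` call returns `"private"` (four times the LLC bandwidth outweighs the doubled miss rate) and the second returns `"shared"` (no bandwidth gain, so only the higher miss rate remains). Taking the `max` of LLC and DRAM time, rather than their sum, treats the two as overlapping resources; this is a simplification chosen here for brevity.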